My GEO process (if I ever did one)

Updated : 16/01/26

GEO (Generative Engine Optimization) is the recently popularized term for optimizing the visibility of a brand or product in AI chatbots.

I don’t do GEO, but if I had to, here’s what I’d do (based on 150+ hours of Training and R&D on AI and RAGs).

1 – Audit the current state of visibility

- Check if, outside of RAG systems, LLMs “know” my brand.

- Analyze the frequent terms that come up when I ask for a description of my brand

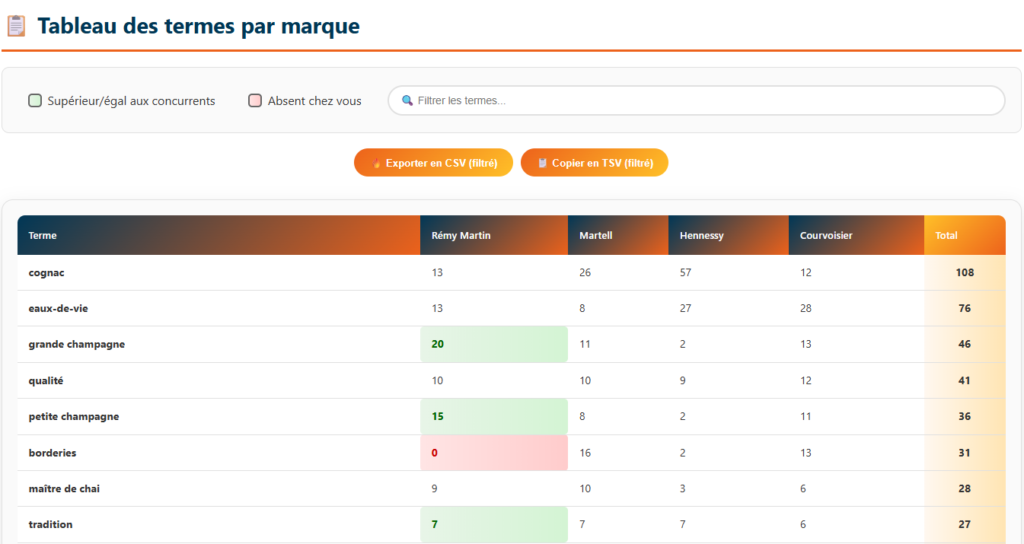

- Analyze the gap in frequent terms vs. competitors to spot strengths/weaknesses and figure out the right communication angle. I’ve got a tool that does this automatically, along with the previous steps.

- Run these frequent term tests not only on the latest models but also on older ones and keep track to monitor evolution

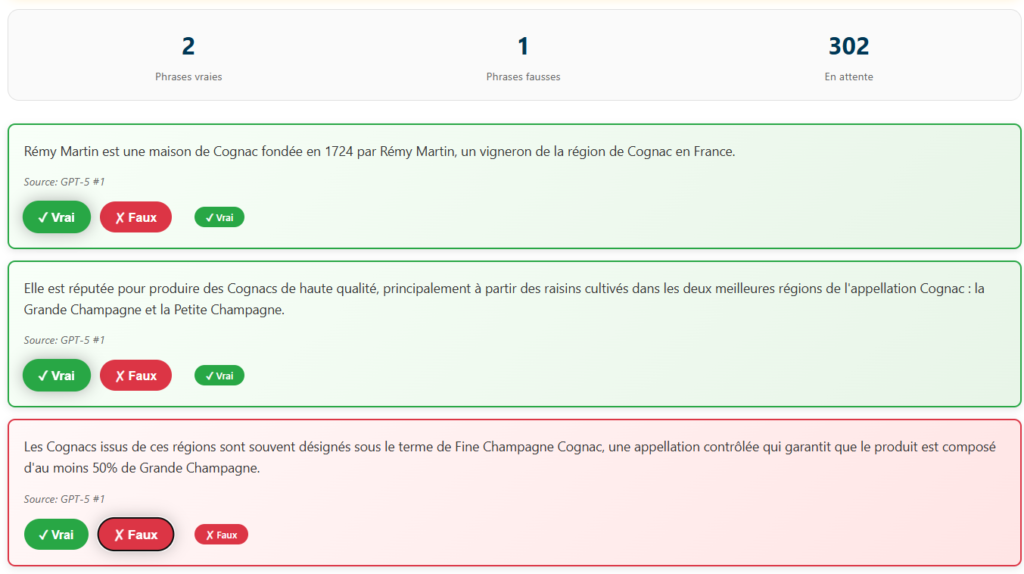

- Analyze the generated phrases used to describe my brand and list the inaccurate ones to know the kind of mistakes my persona might see about my brand (same here, I’ve got a tool to help with that)

- Check if Common Crawl has recently added my site to its database (via their index: https://index.commoncrawl.org/)

- Generate a top 10/15 list of the most likely brands LLMs would suggest for a given product/service with an estimated probability % (don’t have a tool for this yet, but it’s in my Todo List lol)
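The frequent-term comparison from the bullets above can be sketched in a few lines. This is not my actual tool, just a minimal illustration: it assumes you have already collected several LLM-generated descriptions per brand, and the function names, the tiny stop list, and the sample brands used below are all hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stop list (assumption); a real analysis needs a fuller one.
STOPWORDS = {"a", "an", "and", "the", "is", "for", "of", "with", "in", "its", "to", "that"}


def term_frequencies(descriptions):
    """Count in how many descriptions each term appears."""
    counts = Counter()
    for text in descriptions:
        # Keep lowercase words of two or more characters.
        tokens = set(re.findall(r"[a-z][a-z'-]+", text.lower()))
        counts.update(tokens - STOPWORDS)
    return counts


def term_gap(mine, competitor, n_mine, n_competitor):
    """Rank terms by (competitor share - my share), largest gap first."""
    terms = set(mine) | set(competitor)
    gap = {t: competitor[t] / n_competitor - mine[t] / n_mine for t in terms}
    # Sort by gap, then alphabetically so ties are deterministic.
    return sorted(gap.items(), key=lambda kv: (-kv[1], kv[0]))


if __name__ == "__main__":
    my_runs = ["Acme is an affordable shoe brand", "Acme sells affordable sneakers"]
    rival_runs = ["Zeta is a premium shoe brand", "Zeta is known for premium durable shoes"]
    gaps = term_gap(term_frequencies(my_runs), term_frequencies(rival_runs),
                    len(my_runs), len(rival_runs))
    for term, delta in gaps[:5]:
        print(f"{term}: {delta:+.2f}")
```

Terms with a large positive gap are the ones the model associates with the competitor but not with me; large negative gaps are my own strengths. Running the same script on outputs from older models gives the evolution tracking mentioned above.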

I put “know” in quotes because, strictly speaking, an LLM doesn’t actually have any knowledge.
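The Common Crawl check from the list above can also be scripted against the public index at index.commoncrawl.org. The sketch below assumes the index's JSON output (one JSON object per line, each with a `timestamp` field) and that `collinfo.json` lists the newest crawl first; the helper names are mine, and the 404 handling reflects how I understand the index to answer when nothing matches.

```python
import json
from urllib.error import HTTPError
from urllib.parse import urlencode
from urllib.request import urlopen

INDEX_HOST = "https://index.commoncrawl.org"


def build_query_url(collection_id, domain):
    """Build an index query for every captured URL under a domain."""
    params = urlencode({"url": f"{domain}/*", "output": "json", "limit": "50"})
    return f"{INDEX_HOST}/{collection_id}-index?{params}"


def parse_captures(body):
    """Parse the newline-delimited JSON returned by the index."""
    return [json.loads(line) for line in body.splitlines() if line.strip()]


def latest_capture(captures):
    """Return the most recent timestamp (YYYYMMDDhhmmss), or None."""
    stamps = [c["timestamp"] for c in captures if "timestamp" in c]
    return max(stamps) if stamps else None


def check_domain(domain):
    """Network call: look the domain up in the first crawl listed."""
    with urlopen(f"{INDEX_HOST}/collinfo.json", timeout=30) as resp:
        collection_id = json.load(resp)[0]["id"]  # assumption: newest crawl first
    try:
        with urlopen(build_query_url(collection_id, domain), timeout=60) as resp:
            captures = parse_captures(resp.read().decode("utf-8"))
    except HTTPError as err:
        if err.code != 404:  # assumption: 404 means "no captures found"
            raise
        captures = []
    return collection_id, latest_capture(captures)


if __name__ == "__main__":
    crawl, last_seen = check_domain("example.com")
    print(f"{crawl}: last capture {last_seen}")
```

A `None` result only means the domain is absent from that one crawl; looping over several entries of `collinfo.json` would show whether the site was ever captured.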

2 – Technical Audit

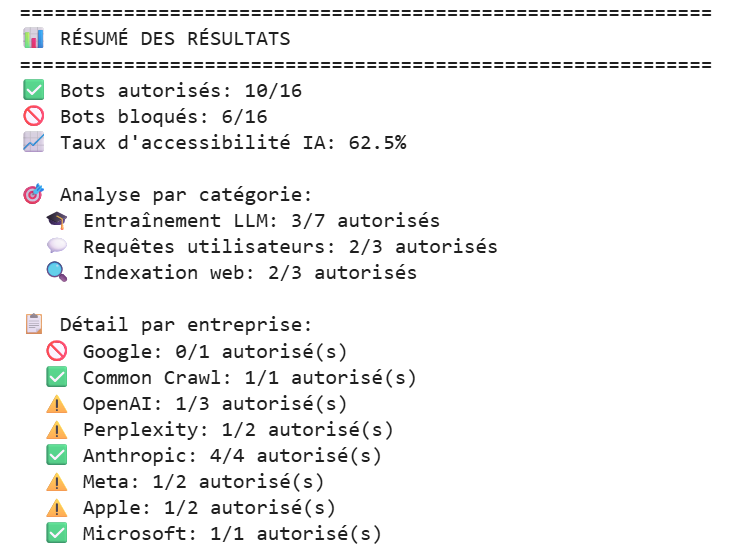

- Are AI crawlers actually able to access my site, or are they getting blocked by something like robots.txt or a firewall? I’ve got a tool that automatically checks this against the user agents of all the major LLM crawlers. If you want me to test a site for you, just hit me up (LinkedIn works fine).

- Run a task with an AI agent (like filling out a form or adding a product to the cart) to make sure it can actually complete the action. AI agents browsing the web on our behalf are already in development, and they might well be part of the future of the internet, so this isn’t something to ignore.

- Make sure there are no spider traps on your website (especially if you run an e-commerce site). This issue can occur quite often when filters aren’t working properly.

A spider trap happens when a broken link leads to another broken link, which leads to another bugged page, and so on. The crawler ends up stuck in an endless loop of useless, broken, or error pages.

You need to be extremely careful about this. In his excellent conference talk (which I highly recommend), Kevin Lesieutre presented a case study of an e-commerce site where the crawler hit a spider trap and eventually stopped crawling the website altogether. After repeatedly hitting broken pages, the crawler concluded that the site wasn’t worth crawling and considered it low quality.

So, it’s definitely worth running a crawl beforehand to make sure there are no spider traps. And if you’re managing an e-commerce website, be especially vigilant about the filters on your product listing pages.
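As a first pass on a crawl export, suspicious URL shapes can be flagged automatically. The sketch below is only a heuristic on URL structure (very deep paths, repeated segments, stacked or duplicated filter parameters), with thresholds that are pure assumptions; it does not follow links or check status codes, so treat the output as a list of candidates to inspect, not as proof of a trap.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit

MAX_DEPTH = 8    # assumed threshold for path segments
MAX_PARAMS = 4   # assumed threshold for stacked filter parameters


def trap_signals(url):
    """Return the list of trap-like signals found in one URL."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    params = parse_qsl(parts.query, keep_blank_values=True)
    signals = []
    if len(segments) > MAX_DEPTH:
        signals.append("deep_path")
    # The same segment three times or more often means a relative-link loop.
    if segments and max(Counter(segments).values()) >= 3:
        signals.append("repeated_segment")
    if len(params) > MAX_PARAMS:
        signals.append("many_params")
    names = [name for name, _ in params]
    if len(names) != len(set(names)):
        signals.append("duplicate_param")
    return signals


def suspicious_urls(urls):
    """Map each flagged URL to its signals; clean URLs are left out."""
    return {u: s for u in urls if (s := trap_signals(u))}


if __name__ == "__main__":
    crawl_export = [
        "https://shop.example/shoes?color=red",
        "https://shop.example/shoes/red/red/red/",
        "https://shop.example/shoes?color=red&color=blue&size=42&size=43&sort=price",
    ]
    for url, signals in suspicious_urls(crawl_export).items():
        print(url, signals)
```

On an e-commerce site, the `many_params` and `duplicate_param` signals are the ones most likely to point at broken listing-page filters.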

- Some chatbots, like ChatGPT, don’t handle JavaScript rendering, so make sure your content is accessible without JavaScript. To test this, don’t hesitate to ask ChatGPT to summarize your page or ask it questions about the content to see what it’s able to understand.
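For the crawler-access check at the top of this audit, the robots.txt part is easy to reproduce with Python's standard library. This is a minimal sketch, not my actual tool: the list of crawler tokens is an assumption you should keep up to date, and it only reads robots.txt rules, so a firewall or CDN block would still need a real request to detect.

```python
from urllib.robotparser import RobotFileParser

# Assumed list of AI-related crawler tokens; extend it as vendors add more.
AI_USER_AGENTS = [
    "GPTBot", "ChatGPT-User", "OAI-SearchBot", "ClaudeBot",
    "PerplexityBot", "Google-Extended", "CCBot",
]


def blocked_agents(robots_txt, url, agents=AI_USER_AGENTS):
    """Return the agents that robots.txt forbids from fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    sample = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /cart/
"""
    print(blocked_agents(sample, "https://example.com/blog/post"))
    print(blocked_agents(sample, "https://example.com/cart/checkout"))
```

In practice you would download the live robots.txt first and pass its text to `blocked_agents`, then test a handful of representative URLs (home page, a category, a product, an article).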

3 – Map Out Query Fan Outs

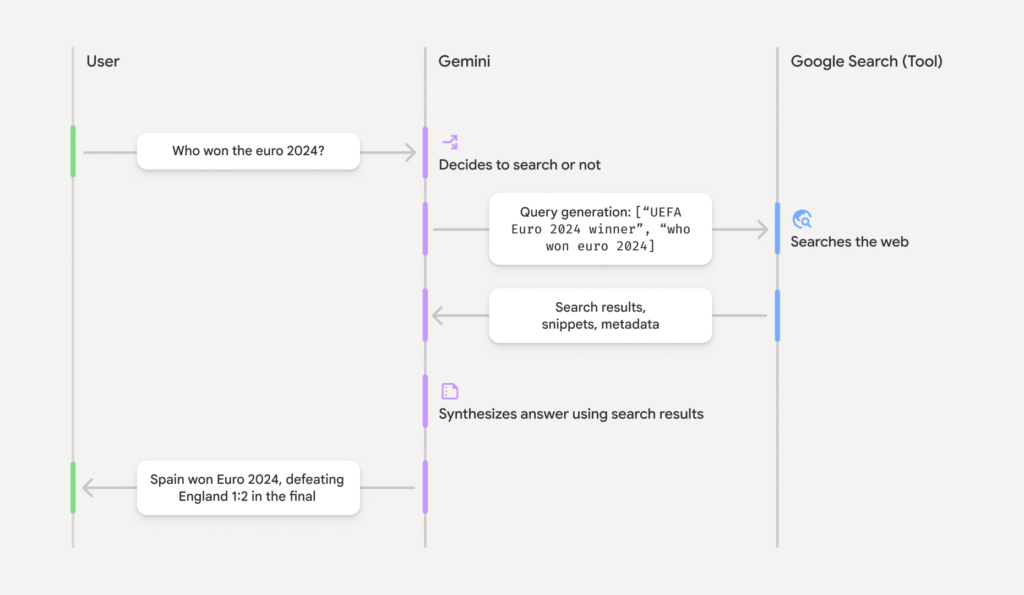

Query fan-outs are background queries that AI chatbots run on search engines like Google or Bing to retrieve information.

Example: If a user enters the prompt ‘Recommend me a vegan and LGBT-friendly restaurant in Lyon’, the LLM might then search for ‘best vegan restaurant Lyon’ and ‘LGBT-friendly restaurant Lyon’.

- Bulk collect as many search needs of the persona as possible (via PAA, Reddit questions, keywords, etc.).

- Turn those search needs into prompts, making personalized variations with my brand’s personas.

- With your list of keywords and also the different personas you’ve found, discover all the prompts potentially typed by your users by using the following prompt on the LLM of your choice:

If I was [your persona] trying to find [your keywords or questions] what might I ask?

- Run all those prompts multiple times on AI Mode / Gemini / ChatGPT to grab the query fan-outs in bulk, then cluster them (you can use DataForSEO to get query fan-outs from ChatGPT in bulk). Finally, list out the query fan-outs where I’m not ranking in the top 10 on Google / Bing: that becomes a content roadmap. (I’ve got a tool to pull query fan-outs from Gemini and ChatGPT; check out my tutorials posted on LinkedIn to try it.)

Btw, some prompts won’t require the chatbot to generate query fan-outs, so try to clean your list of prompts before checking their query fan-outs.
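The persona-and-keyword prompt quoted above is easy to generate in bulk before pasting it into (or sending it to) the LLM of your choice. A minimal sketch, where the personas and search needs are made-up examples and the function name is hypothetical:

```python
from itertools import product

# Same wording as the prompt template quoted in the list above.
TEMPLATE = "If I was {persona} trying to find {need} what might I ask?"


def build_meta_prompts(personas, needs):
    """Return one meta-prompt per (persona, search need) pair."""
    return [TEMPLATE.format(persona=p, need=n) for p, n in product(personas, needs)]


if __name__ == "__main__":
    personas = ["a vegan student in Lyon", "a tourist visiting Lyon for a weekend"]
    needs = ["best vegan restaurant Lyon", "LGBT-friendly restaurant Lyon"]
    for prompt in build_meta_prompts(personas, needs):
        print(prompt)
```

The list grows as personas × needs, so it is worth trimming both inputs before running anything at scale.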

Does your prompt generate query fan-outs? Here’s how to check:

- For ChatGPT: try the OpenAI grounding tool made by Dejan.

- For AI Mode / AI Overviews / Gemini: try the Google Grounding API (if you don’t get any query as an output, it means your prompt doesn’t require a query fan-out).
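Once the fan-outs are collected, turning them into the content roadmap described above is mostly counting and filtering. The sketch below assumes two inputs you have to build yourself: a list of fan-out queries per prompt run, and a dictionary of your best Google / Bing position per query. The "clustering" here is only exact matching after lowercasing, a deliberate simplification of real semantic clustering.

```python
from collections import Counter


def fanout_frequency(runs):
    """Count in how many runs each (normalized) fan-out query appears."""
    counts = Counter()
    for queries in runs:
        counts.update({q.strip().lower() for q in queries})
    return counts


def content_roadmap(counts, rankings, top_n=10):
    """Fan-outs where I am unranked or below top_n, most frequent first."""
    gaps = [
        (query, seen)
        for query, seen in counts.items()
        if rankings.get(query) is None or rankings[query] > top_n
    ]
    return sorted(gaps, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    runs = [
        ["best vegan restaurant Lyon", "LGBT-friendly restaurant Lyon"],
        ["Best vegan restaurant Lyon"],
        ["vegan brunch lyon"],
    ]
    # Hypothetical positions; a missing key means "not ranking at all".
    rankings = {"best vegan restaurant lyon": 14, "lgbt-friendly restaurant lyon": 3}
    for query, seen in content_roadmap(fanout_frequency(runs), rankings):
        print(f"{seen}x  {query}")
```

Sorting by frequency puts the fan-outs that the chatbots trigger most consistently at the top of the roadmap.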

4 – Content Creation

- I’ll do both internal and external content creation since chatbots tend to favor earned stuff according to this research paper.

- A classic move: make sure your brand shows up in as many ranking / “top” / “best X to do Y” list articles as possible.

- From my list of query fan-outs (but only the ones I know generate outputs mentioning brands), I check the rankings on Google (and maybe also Bing, which I don’t think should be written off), and I reach out to the sites that are already ranking to ask them to add a mention of my brand. Having done this before, I know it’s very time-consuming, so we reserve this action for the query fan-outs we consider highly prioritized or lucrative.

- If some query fan-outs are topics that can be covered on my site, then create one page per prompt (that page will be optimized for all the query fan-outs generated by that prompt).

- Sometimes, some prompts will generate query fan-outs in English, even if the language of my site or the prompt itself is different. In that case, you’ll need to either create external content on English-language websites or develop an English version of the site to target those English query fan-outs.

- I’d especially focus on BOFU content. For example, an article like “My review of site X: is it trustworthy?” ranks easily on Google/Bing and also gets pulled by ChatGPT when someone asks about the reliability of an e-commerce site before buying. I’ve tested it : it works well, and I think AI can serve as the final step for the persona before conversion. So you might as well control the narrative.

- Keep pushing a quality content strategy that covers the entire topic. If your pages aren’t more “valuable” than basic AI summaries, then honestly your site has no reason to exist. We actually published an article with Paul Grillet that breaks down our process and method to create outstanding content.

- If you’re running an e-commerce business, make sure your product feed and visibility on Google Shopping are up to date and working properly. Indeed, we know that ChatGPT relies on Google Shopping to power its own ChatGPT Shopping display.

- If you’re running an e-commerce site, the way you write your product page descriptions also matters. Here’s a breakdown of an arXiv paper that explains how to do it.

5 – Measure

- Check the AI logs (especially the ones triggered by a user request) to get an idea of how many times your content is being pulled as a source, and which content specifically. I’ve got a LinkedIn post listing all the logs worth tracking.

Recently, we found the following user agent: GoogleAgent-URLContext, which seems to let Gemini access the content of a URL directly without going through its search engine or through Google. Monitoring this user agent therefore makes it possible to know that someone using Gemini has requested information about this URL. More info about the URL context API here.

- Here are the interesting and actionable insights you can uncover by analyzing the AI logs collected on your server (I stole them from Jerome Salomon) :

- Check for 499 errors in the logs: that’s when ChatGPT decides to cut its visit short because your site took too long to load (so yeah, time to revisit TTFB or other webperf issues).

- Even if it’s marginal, keep an eye on traffic from AI chatbots by adding this regex into your analytics tool.

^.*ai|.*\.openai.*|.*copilot.*|.*chatgpt.*|.*gemini.*|.*gpt.*|.*neeva.*|.*writesonic.*|.*nimble.*|.*outrider.*|.*perplexity.*|.*google.*bard.*|.*bard.*google.*|.*bard.*|.*edgeservices.*|.*astastic.*|.*copy.ai.*|.*bnngpt.*|.*gemini.*google.*$

- Measure the number of clicks coming from AI Overviews by setting up a JavaScript variable in Google Tag Manager (check out the tutorial for how to do it). This tracking relies on the parameter used by AI Overviews, which (as far as I know) is always included in the source URLs. That said, this should be confirmed, since I haven’t personally dug into AI Overviews for a while (it’s still not rolled out in France 😅).
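The log checks from this section (which content gets pulled by user-triggered agents, and how often those visits end in a 499) can be approximated with a short script. The sketch below assumes access logs in the common "combined" format and a 499 status as written by nginx when the client closes the connection; the agent list is an assumption built around the user agents mentioned here, and the function name is hypothetical.

```python
import re
from collections import Counter

# Combined log format: ip - user [date] "METHOD path proto" status size "referer" "agent"
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Assumed list of user-triggered AI agents; adjust to what your logs show.
USER_TRIGGERED = ("ChatGPT-User", "Perplexity-User", "GoogleAgent-URLContext")


def summarize(lines, agents=USER_TRIGGERED):
    """Return (hits per (agent, path), 499 responses per agent)."""
    hits = Counter()
    aborted = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in another format
        user_agent = match["agent"].lower()
        agent = next((a for a in agents if a.lower() in user_agent), None)
        if agent is None:
            continue  # not one of the tracked AI agents
        hits[(agent, match["path"])] += 1
        if match["status"] == "499":
            aborted[agent] += 1
    return hits, aborted


if __name__ == "__main__":
    with open("access.log", encoding="utf-8", errors="replace") as handle:
        hits, aborted = summarize(handle)
    for (agent, path), count in hits.most_common(20):
        print(f"{count:>5}  {agent:<24} {path}")
    print("499 responses:", dict(aborted))
```

A high share of 499s for one agent on specific pages is the cue to look at TTFB for those templates first.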

At the end of the day, you’ve got to understand that GEO is way more about branding than traffic acquisition. The goal is to get your brand mentioned, not to expect people to click through to your site (they basically never do).

So apart from monitoring direct traffic or branded searches in search engines (which could just as well come from your overall comms efforts), it’s basically impossible to measure the direct business impact of your GEO strategy.

If you feel overwhelmed by “GEO,” before rushing into offering a half-baked service, I can only recommend one thing: seriously educate yourself. Yes, it takes time, but if you want to be an honest, trustworthy service provider, it’s the baseline.

Here are two of my LinkedIn carousels that give a lot of insight into how AI Search and AI in general work. They’re a good starting point, but obviously not enough to fully master the topic. Sorry, they’re in French.

Ian Sorin is an SEO consultant at Empirik agency in Lyon, France. Passionate about search engine and LLM algorithms, he actively monitors patents, updates, and research papers to better understand possible manipulations on these systems. In his spare time, he develops tools to help automate analysis tasks and regularly conducts tests on personal projects to discover new GEO and SEO tricks.