I read the latest scientific study on Google’s AI Overviews so you don’t have to

TL;DR

• 33% contradiction rate — when AIO and Featured Snippets appear together, they contradict each other one-third of the time

• Medical disclaimers are rare — 89% of AI Overviews and 93% of Featured Snippets provide zero « consult your doctor » warnings

• Question phrasing matters — « when » questions trigger Featured Snippets 35.5% of the time, « why » questions only 15.7%

• Featured Snippets favor commercial sources — they cite business/shopping sites 43% more often than AI Overviews (10% vs 7%)

• Source quality is inconsistent — nearly half of citations come from medium to low credibility sources

• Google hides problematic AI Overviews: during the test, 157 AI Overviews were generated but suppressed (often containing controversial health content)

Hey, it’s Ian. I just spent a few hours deep-diving into a 17-page academic study about Google’s AI Overviews so you don’t have to. And honestly? The findings are wild.

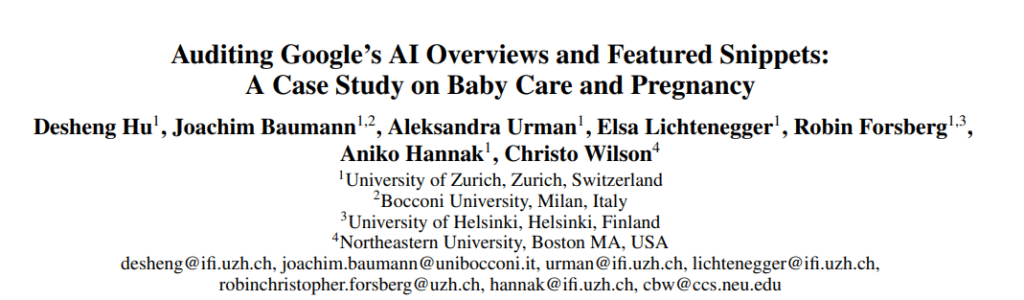

The Study Setup: 1,508 Queries, 6 Question Types, One Big Mess

This visual from the study shows the complete methodology. Researchers tested 1,508 real pregnancy and baby care queries across 6 question types (Binary, Wh*, When, How-to, How+Adjective/Adverb, Why) and 3 sentiment variations (Neutral, Positive, Negative). They evaluated 6 dimensions: occurrence rate, consistency, relevance, safeguards, source categories, and sentiment valence.

The researchers from the University of Zurich didn’t mess around. They:

✅ Collected 1,508 real search queries about pregnancy and baby care

✅ Tested 6 different question types (we’ll get to this)

✅ Created 3 sentiment variations for each query (neutral, positive, negative)

✅ Manually evaluated every single result for quality, consistency, and safety

The 6 Question Types That Change Everything

Here’s something most SEOs miss: how you phrase a question dramatically impacts which SERP features appear.

The study tested:

- Binary questions: « Can pregnant women eat feta? »

- Wh* questions (what, which, etc.): « What foods are safe during pregnancy? »

- When questions: « When can babies start eating cereal? »

- How-to questions: « How to help baby acne? »

- How + Adjective/Adverb: « How often should babies eat? »

- Why questions: « Why do babies get acne? »

Keep these in mind; they’ll matter when we look at the results.
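To make the six categories concrete, here’s a minimal rule-based sketch in Python that buckets a query by its opening words. It’s an illustration under my own assumptions, not how the Zurich researchers labeled their queries: the regex patterns, the short list of « How + » modifiers, and the name `classify_question` are all hypothetical.

```python
import re

# Hypothetical rule-based sketch; NOT the study's labeling procedure.
# Patterns are tried in order and the first match wins.
QUESTION_RULES = [
    ("When", re.compile(r"when\b")),
    ("Why", re.compile(r"why\b")),
    ("How-to", re.compile(r"how to\b")),
    # Assumed, non-exhaustive list of adjectives/adverbs that follow "how"
    ("How+Adjective/Adverb", re.compile(r"how (often|long|much|many|soon|old|early|late)\b")),
    ("Wh*", re.compile(r"(what|which|who|whom|whose|where)\b")),
    ("Binary", re.compile(r"(can|is|are|do|does|did|should|will|would|could|may)\b")),
]


def classify_question(query: str) -> str:
    """Return the first question type whose pattern matches the start of the query."""
    text = query.strip().lower()
    for label, pattern in QUESTION_RULES:
        if pattern.match(text):  # re.match only matches at the beginning of the string
            return label
    return "Other"


if __name__ == "__main__":
    for q in [
        "Can pregnant women eat feta?",
        "What foods are safe during pregnancy?",
        "When can babies start eating cereal?",
        "How to help baby acne?",
        "How often should babies eat?",
        "Why do babies get acne?",
    ]:
        print(f"{classify_question(q):<22} {q}")
```

Anything that doesn’t start with one of these openers falls through to « Other », which is where a real classifier would need more than a handful of regexes.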

Key Finding #1: AI Overviews Are Everywhere, Featured Snippets Are Fading

The numbers:

- AI Overviews: 84% appearance rate

- Featured Snippets: 33% appearance rate

- Both appearing together: 22% of searches

But here’s where it gets interesting: question type matters.

- « When » questions trigger Featured Snippets 35.5% of the time (highest)

- « Why » questions only trigger them 15.7% of the time (lowest)

- AI Overviews maintain 80-92% appearance across ALL question types

Key Finding #2: When AI Meets Featured Snippets, Chaos Ensues

When AI Overviews and Featured Snippets appear on the same SERP (which happens 22% of the time), they contradict each other 33% of the time for full answers. For highlighted portions? 41% inconsistency.
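Those percentages are conditional: they only apply to the 22% of SERPs where both features show up. A quick back-of-the-envelope calculation gives the share of all searches affected, assuming the reported rates are conditional on co-occurrence and using the rounded figures quoted here:

```python
# Back-of-the-envelope estimate using the rounded rates quoted in this article.
# Assumption: the inconsistency rates are conditional on both features appearing.
P_BOTH = 0.22                    # share of searches showing both an AIO and a Featured Snippet
P_INCONSISTENT_FULL = 0.33       # full answers inconsistent, given both appear
P_INCONSISTENT_HIGHLIGHT = 0.41  # highlighted portions inconsistent, given both appear


def share_of_all_searches(p_both: float, p_given_both: float) -> float:
    """P(both appear AND inconsistent) = P(both appear) * P(inconsistent | both appear)."""
    return p_both * p_given_both


if __name__ == "__main__":
    full = share_of_all_searches(P_BOTH, P_INCONSISTENT_FULL)
    highlighted = share_of_all_searches(P_BOTH, P_INCONSISTENT_HIGHLIGHT)
    print(f"Full answers:         {full:.1%} of all searches")
    print(f"Highlighted portions: {highlighted:.1%} of all searches")
```

With these rounded inputs, that works out to roughly 7% of all searches for full answers and roughly 9% for highlighted portions. Treat it as an order-of-magnitude estimate, not a figure from the paper.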

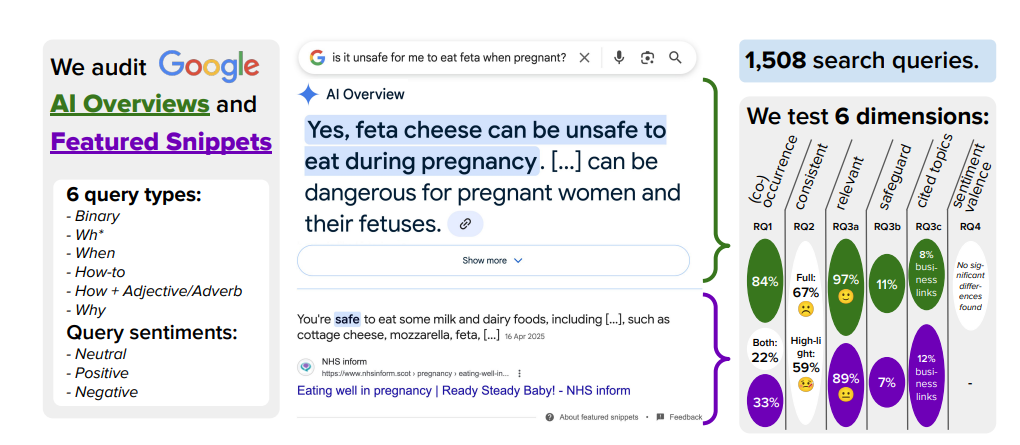

The Three Types of Contradictions

The researchers identified three flavors of chaos:

1. Binary Contradictions (2.6% of cases)

These are the scary ones—direct opposites that can’t both be true.

Example from the study:

- AIO says: « Feta cheese can be unsafe during pregnancy… dangerous for pregnant women and their fetuses »

- Featured Snippet says: « You’re safe to eat some milk and dairy foods, including cottage cheese, mozzarella, feta… »

One says « unsafe, » the other says « safe. » For a pregnant woman, this isn’t just confusing—it’s potentially dangerous. Feta made from unpasteurized milk can contain Listeria, which can cause miscarriage.

2. Numerical Mismatches (23.2% of highlighted answers)

Different timeframes, quantities, or ages that don’t overlap.

Example:

- AIO: « Baby acne typically clears up within a few weeks or months »

- FS: « Baby acne could clear up within a few days to a couple of weeks »

Weeks vs. days vs. months: which is it? 🤔

These mismatches were particularly common for:

- « How + Adjective/Adverb » questions: 48.7% inconsistency rate

- « When » questions: 62.5% inconsistency rate for highlighted text

3. Other Problematic Mismatches (14.9% of cases)

Different preconditions, risk levels, or interpretations of the same question.

Example:

- AIO: « Yes, babies can sleep with a pacifier from birth—it may reduce SIDS risk »

- FS: « Bottle-fed babies can sleep with a pacifier from birth, but breastfed babies should only use one after 3-4 weeks »

Both answers are positive about pacifiers, but only the Featured Snippet includes the critical condition for breastfed babies.

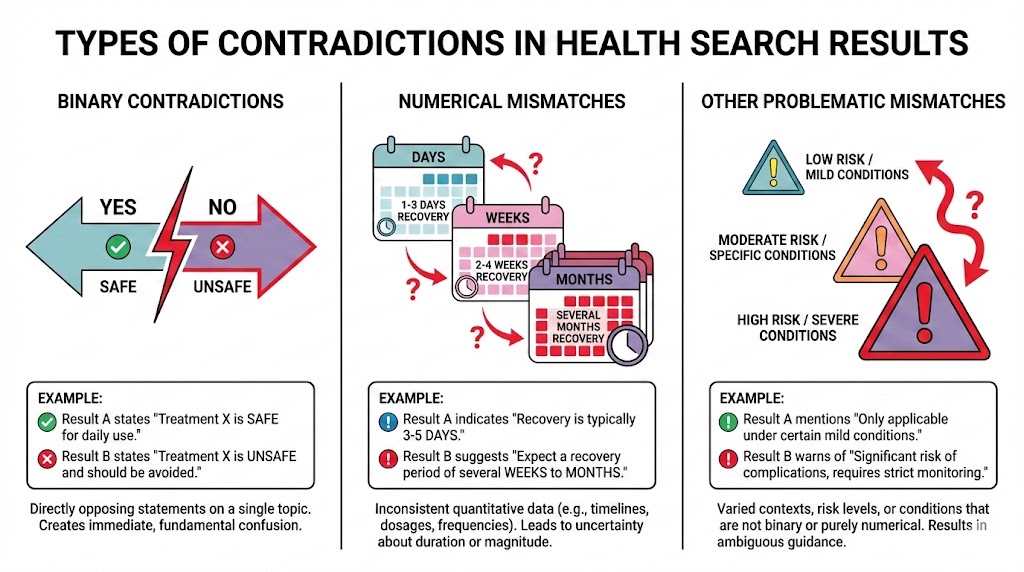

Key Finding #3: Sources Change Based on How You Ask

Remember those 6 question types? They don’t just affect which features appear; they also change which sources Google cites.

The Source Breakdown

Overall, the source distribution looks like this:

For AI Overviews:

- Health & Wellness sites: 66%

- Business/Shopping: 7%

- Government: 5%

- Reference (Wikipedia): 1%

For Featured Snippets:

- Health & Wellness sites: 67%

- Business/Shopping: 10% ⚠️

- Government: 6%

- Reference: 1%

In relative terms, Featured Snippets cite commercial sources 43% more often than AI Overviews (10% vs 7%, a gap of 3 percentage points).
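« 43% more often » is a relative difference, which is easy to confuse with a percentage-point gap. A small Python sketch makes the distinction explicit (the function names are just illustrative):

```python
def relative_increase(value: float, baseline: float) -> float:
    """How much larger `value` is than `baseline`, as a fraction of `baseline`."""
    return (value - baseline) / baseline


def point_gap(value: float, baseline: float) -> float:
    """Plain difference between two percentages, in percentage points."""
    return value - baseline


FS_COMMERCIAL = 10.0   # % of Featured Snippet citations from business/shopping sites
AIO_COMMERCIAL = 7.0   # % of AI Overview citations from business/shopping sites

if __name__ == "__main__":
    print(f"Relative: {relative_increase(FS_COMMERCIAL, AIO_COMMERCIAL):.0%} more often")  # Relative: 43% more often
    print(f"Absolute: {point_gap(FS_COMMERCIAL, AIO_COMMERCIAL):.0f} percentage points")   # Absolute: 3 percentage points
```

Because the 10% and 7% inputs are themselves rounded, the 43% figure is approximate.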

How Question Type Impacts Sources

- « When » questions → Highest commercial source rate (10.8-22.7%) + lowest health sources (45.5-63.1%)

- « Why » questions → Lowest commercial sources (0-1.5%)

The Credibility Problem

The researchers manually reviewed the top 10% most-cited domains. Their findings:

- High credibility (CDC, NHS, WHO, medical journals): Clear minority

- Medium credibility (Healthline, commercial health media): Significant presence

- Low credibility (e-commerce, social media): Present in both AIO and FS

Nearly half of citations in AI Overviews and Featured Snippets come from low to medium credibility sources.
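If you want to run a similar audit on your own SERP scrapes, the mechanical part (finding the top 10% most-cited domains, then measuring how many of their citations fall in the medium or low tier) can be sketched in Python. The tier labels below are hypothetical placeholders: in the study, credibility was judged manually. `example-shop.com` is an invented e-commerce domain, and the sample URLs are toy input, not study data.

```python
from collections import Counter
from math import ceil
from urllib.parse import urlparse

# Hypothetical tier labels; the study's reviewers assessed credibility by hand.
CREDIBILITY = {
    "cdc.gov": "high",
    "nhs.uk": "high",
    "who.int": "high",
    "healthline.com": "medium",
    "example-shop.com": "low",  # invented placeholder for an e-commerce site
}


def domain_of(url: str) -> str:
    """Lower-cased host name without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def top_domains(urls: list[str], fraction: float = 0.10) -> list[str]:
    """Most-cited domains, keeping the top `fraction` of distinct domains (at least one)."""
    counts = Counter(domain_of(u) for u in urls)
    k = max(1, ceil(len(counts) * fraction))
    return [domain for domain, _ in counts.most_common(k)]


def medium_low_share(urls: list[str], domains: list[str]) -> float:
    """Share of citations to `domains` whose (known) tier is medium or low."""
    rated = [d for d in map(domain_of, urls) if d in domains and d in CREDIBILITY]
    if not rated:
        return 0.0
    return sum(CREDIBILITY[d] in ("medium", "low") for d in rated) / len(rated)


# Toy input with made-up paths, for illustration only
SAMPLE_URLS = [
    "https://www.cdc.gov/pregnancy/a",
    "https://www.healthline.com/health/b",
    "https://www.healthline.com/health/c",
    "https://www.nhs.uk/pregnancy/d",
    "https://example-shop.com/product/e",
]

if __name__ == "__main__":
    print(top_domains(SAMPLE_URLS))
    print(medium_low_share(SAMPLE_URLS, top_domains(SAMPLE_URLS, fraction=1.0)))
```

With only four distinct domains, the 10% cut keeps just the single most-cited one; passing `fraction=1.0` rates every domain, which is handy when checking the logic on small samples.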

Key Finding #4: Where Are the Medical Disclaimers?

You know those « consult your doctor » warnings you see sometimes? Yeah, they’re rare.

Safeguard cue presence:

- AI Overviews: 11% have explicit or implicit warnings

- Featured Snippets: 7%

For context: This is health information about pregnancy and infants. High-stakes doesn’t begin to describe it.

The study found that « binary » questions (yes/no) were most likely to include safeguards, but even then, coverage was spotty.

The pattern: question type significantly affects safeguard presence in AI Overviews (p < 0.001), but sentiment has no statistically significant effect (p = 0.095).

This means: how you phrase the question matters more than the emotional tone of your query.
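The researchers coded safeguard cues by hand, including implicit ones. For a rough first pass over your own scraped answers, a keyword matcher can at least flag explicit « consult your doctor »-style phrases. This is a swapped-in heuristic, not the study’s method, and the phrase list is an assumption that will miss implicit warnings entirely:

```python
import re

# Hypothetical list of explicit safeguard phrases (NOT the study's coding scheme).
# Implicit cues, e.g. hedged risk language, cannot be caught this way.
SAFEGUARD_PATTERNS = [
    r"\bconsult (your|a) (doctor|physician|healthcare provider|pediatrician|midwife)\b",
    r"\btalk to (your|a) (doctor|healthcare provider|pediatrician|midwife)\b",
    r"\bseek medical (advice|attention)\b",
    r"\bnot (a substitute for|intended as) (professional )?medical advice\b",
]
SAFEGUARD_RE = re.compile("|".join(SAFEGUARD_PATTERNS), re.IGNORECASE)


def has_explicit_safeguard(answer: str) -> bool:
    """True if the answer contains at least one explicit safeguard phrase."""
    return SAFEGUARD_RE.search(answer) is not None


def safeguard_rate(answers: list[str]) -> float:
    """Fraction of answers containing an explicit safeguard phrase."""
    if not answers:
        return 0.0
    return sum(has_explicit_safeguard(a) for a in answers) / len(answers)
```

Any rate this produces should be read as a lower bound on the « explicit or implicit » figures reported above, since only explicit phrasing is counted.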

The Suppressed AI Overviews: What Google Hides from You

Here’s something most SEOs don’t know: Google generates AI Overviews that you never see.

The researchers discovered 157 AI Overviews that existed in the page’s HTML code but were deliberately hidden from users’ view.

Wait, What Does « Existed in the HTML » Mean?

Let me explain this clearly because it’s fascinating (and slightly concerning).

When you search on Google, the page you see is rendered from HTML code that your browser receives. The researchers scraped this HTML and discovered something unusual:

For some queries, Google’s servers generated a complete AI Overview (the full text, the sources, the formatting) but then applied CSS styling that kept it from being displayed.

Think of it like this:

- Google’s AI writes an answer ✅

- Google decides « actually, we shouldn’t show this » ❌

- The answer is technically there in the code, but invisible to users

How They Found These Hidden AI Overviews

The researchers built a custom parser that:

- Checked for AIO structural elements in the HTML (container elements, identifiers)

- Analyzed CSS styling attributes to determine visibility

- Classified AIOs as « visible » or « suppressed » based on whether they had display properties that would hide them from view

This is advanced scraping work — they weren’t just looking at what Google shows users, they were examining the underlying code structure.
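Here’s a minimal Python sketch of the kind of visibility check described above, using the standard library’s `html.parser`. It is not the researchers’ parser. Google’s real SERP markup is undocumented and changes often, so the `data-aio` attribute is a hypothetical stand-in for whatever container identifier a real scraper would target. Only inline `style` and `hidden` attributes on the container itself are checked; a real page can also hide elements through class-based stylesheet rules or hidden ancestors.

```python
from html.parser import HTMLParser
from typing import Optional

# Hypothetical container marker; real SERP identifiers are undocumented.
AIO_MARKER = "data-aio"


def hides_element(attrs: dict[str, Optional[str]]) -> bool:
    """True if the element's own inline attributes would keep it from rendering."""
    if "hidden" in attrs:
        return True
    style = (attrs.get("style") or "").lower()
    declarations = {}
    for decl in style.split(";"):
        if ":" in decl:
            prop, value = decl.split(":", 1)
            declarations[prop.strip()] = value.strip()
    return declarations.get("display") == "none" or declarations.get("visibility") == "hidden"


class AIOVisibilityParser(HTMLParser):
    """Records one 'visible' or 'suppressed' label per AIO container, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.labels: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)  # attribute names arrive lower-cased; valueless ones map to None
        if AIO_MARKER in attr_map:
            self.labels.append("suppressed" if hides_element(attr_map) else "visible")


def classify_aio(html: str) -> list[str]:
    parser = AIOVisibilityParser()
    parser.feed(html)
    parser.close()
    return parser.labels
```

For example, `classify_aio('<div data-aio="1">…</div><div data-aio="1" style="display: none">…</div>')` would label the first container « visible » and the second « suppressed ».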

Why This Matters: Suppressed AIOs Are Worse Quality

Here’s where it gets interesting. The suppressed AI Overviews weren’t random; they were systematically lower quality:

Relevance problems:

- 16% of suppressed AIOs had low relevance to the query

- Compare that to only 1% of visible AIOs

Consistency problems:

- Only 31% of suppressed AIOs were consistent with Featured Snippets when both appeared

- Compare that to 67% of visible AIOs

Translation: Google’s suppression filter appears to be catching AI Overviews that would have provided contradictory or irrelevant information.

The Controversial Content Pattern

Through qualitative analysis, the researchers noticed a pattern in what got suppressed:

Common themes in suppressed AI Overviews:

- Pro-life / anti-abortion content (fuck those people btw)

- Anti-vaccination information

- Other medically controversial topics

This suggests Google has implemented some form of content policy filtering for AI Overviews that goes beyond just relevance scoring.

The SEO Implications

This finding reveals several important things:

1. Google knows AI Overviews have quality problems

Otherwise, why build a suppression system? They’re generating answers, evaluating them, and deciding « nope, not good enough to show. »

2. The filter isn’t perfect

Remember: when a visible AIO appears alongside a Featured Snippet, the two are still inconsistent 33% of the time. Google’s catching the worst offenders, but plenty of problematic content still makes it through.

3. Topic sensitivity matters

If your content touches on controversial health topics (abortion, vaccines, alternative medicine), Google may be more likely to suppress AI Overviews that cite you — even if your information is accurate.

4. There’s a quality threshold we can’t see

Google has internal criteria for « showable vs. suppressible » AI Overviews. As SEOs, we’re optimizing blind: we don’t know what triggers suppression.

The Technical Question: Why Generate Then Hide?

You might wonder: Why generate an AI Overview at all if you’re just going to hide it?

Good question. A few possible explanations:

Theory 1: Post-generation filtering

Google’s AI generates the answer first, then a separate quality filter evaluates it. If it fails the quality check, it gets suppressed.

Theory 2: A/B testing and data collection

By generating answers in the HTML (even if hidden), Google can:

- Collect data on which answers would have been shown

- Test different suppression thresholds

- Analyze failure patterns to improve the system

Theory 3: Serving architecture

It might be technically easier to generate all AIOs and hide the bad ones than to predict quality before generation.

Whatever the reason, the takeaway is clear: Google’s AI Overview system is less confident than it appears.

For every 10 AI Overviews you see, there might be 1-2 more that Google generated but chose to suppress, presumably because they didn’t meet its quality standards.

And if 157 out of 1,508 queries (~10%) had suppressed AIOs in this study… that’s a significant failure rate for a production system.
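The « 1-2 more per 10 » estimate can be sanity-checked with the study’s numbers. The sketch below assumes, as this article does, that 1,508 is the right denominator, and additionally assumes that the 84% appearance rate counts only visible AIOs; neither assumption is confirmed here.

```python
TOTAL_QUERIES = 1508
SUPPRESSED_AIOS = 157
VISIBLE_RATE = 0.84  # reported AIO appearance rate (assumed to count visible AIOs only)

suppressed_share = SUPPRESSED_AIOS / TOTAL_QUERIES
visible_aios = round(TOTAL_QUERIES * VISIBLE_RATE)       # approximate count of visible AIOs
per_ten_visible = 10 * SUPPRESSED_AIOS / visible_aios    # suppressed AIOs per 10 visible ones

if __name__ == "__main__":
    print(f"Suppressed AIOs: {suppressed_share:.1%} of queries")
    print(f"Visible AIOs (approx.): {visible_aios}")
    print(f"Suppressed per 10 visible: {per_ten_visible:.1f}")
```

Under those assumptions, that’s about 10.4% of queries and roughly 1.2 suppressed AIOs for every 10 visible ones, in line with the range above.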

What’s Next?

Google’s AI Overviews are here to stay: they appeared in 84% of the searches in this study, dominating the SERP real estate that Featured Snippets used to own.

But as this study shows, we’re still in the messy early days:

- Contradictory information on the same page (33% of the time when both features appear)

- Inconsistent source quality (nearly half are medium/low credibility)

- Missing medical disclaimers (89% of AIOs provide zero warnings)

- Hidden AI Overviews that Google generates but won’t show you

As SEO professionals working in YMYL niches, we need to understand these dynamics. Not just to rank better, but to ensure the content we create actually helps people rather than adding to the confusion.

Resources and Further Reading

📄 Full study paper: Auditing Google’s AI Overviews and Featured Snippets: A Case Study on Baby Care and Pregnancy – University of Zurich, 2025

What’s your experience with AI Overviews in your niche?

Have you noticed contradictions between AIO and Featured Snippets? Are you seeing different source categories depending on query phrasing?

Drop your observations below—this is a rapidly evolving space, and real-world data from different verticals will help all of us navigate it better.

And if you found this useful, save this post. You’ll want to reference these findings when planning your 2025 content strategy.

Ian Sorin is an SEO consultant at Empirik, a digital marketing agency based in Lyon, France. He keeps a close eye on research papers about SEO / GEO content. He builds tools to automate the tedious parts of SEO, runs experiments on his own projects, and digs into research to stay ahead of how AI is reshaping search.